LVM Snapshots - Going surprisingly great!

The previous post about them...

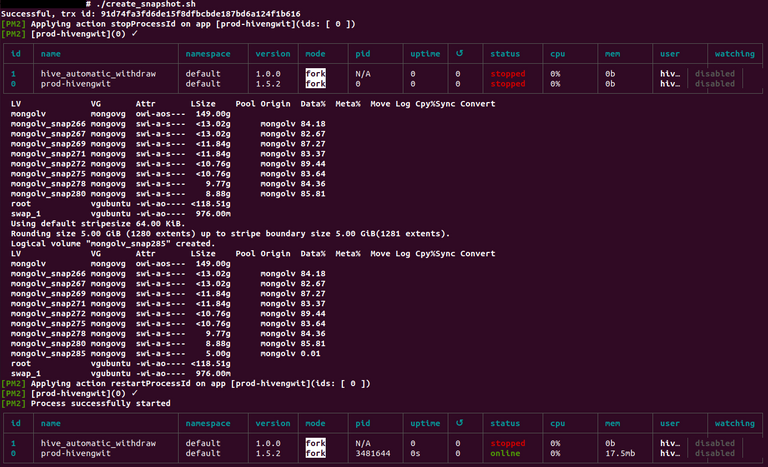

In that post I introduced how I am doing snapshots for HE (Hive-Engine).

    # lvs
      LV              VG      Attr       LSize   Pool Origin  Data%  Meta%  Move Log Cpy%Sync Convert
      mongolv         mongovg owi-aos--- 149.00g
      mongolv_snap267 mongovg swi-a-s--- <13.02g      mongolv 85.59
      mongolv_snap269 mongovg swi-a-s--- <13.02g      mongolv 82.29
      mongolv_snap271 mongovg swi-a-s--- <11.84g      mongolv 86.69
      mongolv_snap272 mongovg swi-a-s--- <11.84g      mongolv 84.62
      mongolv_snap275 mongovg swi-a-s--- <10.76g      mongolv 87.40
      mongolv_snap278 mongovg swi-a-s---   9.77g      mongolv 88.62
      mongolv_snap280 mongovg swi-a-s---   9.77g      mongolv 83.33
      mongolv_snap285 mongovg swi-a-s---  <6.67g      mongolv 86.74

I have set up an additional stripe of 3 disks next to the main stripe, to hold the snapshots. And given that the database is currently at around 42 GB, a snapshot from 20 days ago taking about 13 GB is quite nice!
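The space a snapshot really consumes is its allocated size (LSize) multiplied by its Data% figure. Here is a minimal sketch of that arithmetic. The function name `snap_used` is made up for illustration, and it assumes input lines of the form "name size percent", with sizes printed in GiB as in the `lvs` output above.

```shell
# Hypothetical helper: estimate the space actually consumed by each snapshot.
# Reads "name size data_percent" lines on stdin and prints "name used_GiB".
# Lines without a Data% value (such as the origin volume) are skipped.
snap_used() {
    awk 'NF == 3 {
        size = $2
        gsub(/[<g]/, "", size)   # drop the "<" rounding marker and the "g" unit
        printf "%s %.2f\n", $1, size * $3 / 100
    }'
}

# Assumed usage on a real system (not run here):
#   lvs --noheadings -o lv_name,lv_size,data_percent mongovg | snap_used
```

With the numbers above, `mongolv_snap267` works out to roughly 11 GiB actually used out of the 13.02 GiB allocated.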

    # df -h /var/lib/mongodb
    Filesystem                   Size  Used Avail Use% Mounted on
    /dev/mapper/mongovg-mongolv  146G   42G   97G  31% /var/lib/mongodb

I still need to write my scripts to automate this, but because it is trivial for me, I haven't been prioritizing it. The idea is to work out, from the free space on the volume group, whether you can still keep another snapshot or need to delete one first. That's the ultimate objective, but the first version will likely be dumber than that.

    # pvscan | grep mongovg
      PV /dev/sdd   VG mongovg   lvm2 [<50.00 GiB / 336.00 MiB free]
      PV /dev/sde   VG mongovg   lvm2 [<50.00 GiB / 336.00 MiB free]
      PV /dev/sdf   VG mongovg   lvm2 [<50.00 GiB / 336.00 MiB free]
      PV /dev/sdg   VG mongovg   lvm2 [<50.00 GiB / 21.10 GiB free]
      PV /dev/sdh   VG mongovg   lvm2 [<50.00 GiB / 21.10 GiB free]
      PV /dev/sdi   VG mongovg   lvm2 [<50.00 GiB / 21.10 GiB free]
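The "guess from the available space" idea could look something like the sketch below. This is not the final script, just one possible shape for the decision. The function name `snapshot_action` and the 15360 MiB (15 GiB) snapshot size are assumptions made for illustration.

```shell
# Hypothetical policy: given the free space in the volume group and the size
# wanted for the next snapshot (both in whole MiB), print what to do next.
snapshot_action() {
    free_mib=$1
    wanted_mib=$2
    if [ "$free_mib" -ge "$wanted_mib" ]; then
        echo "create"          # enough room for one more snapshot
    else
        echo "delete-oldest"   # free some space before snapshotting
    fi
}

# Assumed way to feed it on a real system (not run here):
#   free=$(vgs --noheadings --units m --nosuffix -o vg_free mongovg | tr -d ' <' | cut -d. -f1)
#   snapshot_action "$free" 15360
```

Keeping the decision separate from the LVM calls makes it easy to try the policy with made-up numbers before letting it near a real volume group.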

How to Delete an LVM Snapshot?

This is quite simple: just issue the following command. You don't need to unmount or stop anything in the process. The snapshot only holds the data as it was at the time the snapshot was taken, so nothing you discard is "active" data.

    # lvremove /dev/mongovg/mongolv_snap267
    Do you really want to remove and DISCARD active logical volume mongovg/mongolv_snap267? [y/n]: y
      Logical volume "mongolv_snap267" successfully removed

And then the space available increases:

    # pvscan | grep mongovg
      PV /dev/sdd   VG mongovg   lvm2 [<50.00 GiB / 336.00 MiB free]
      PV /dev/sde   VG mongovg   lvm2 [<50.00 GiB / 336.00 MiB free]
      PV /dev/sdf   VG mongovg   lvm2 [<50.00 GiB / 336.00 MiB free]
      PV /dev/sdg   VG mongovg   lvm2 [<50.00 GiB / 25.44 GiB free]
      PV /dev/sdh   VG mongovg   lvm2 [<50.00 GiB / 25.44 GiB free]
      PV /dev/sdi   VG mongovg   lvm2 [<50.00 GiB / 25.44 GiB free]
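For an automated cleanup, something has to choose which snapshot goes first. The sketch below picks the one with the lowest number after `_snap`, on the assumption (not confirmed anywhere, just how the names above look) that lower numbers mean older snapshots. The function name `oldest_snapshot` is made up for illustration.

```shell
# Hypothetical helper: read LV names on stdin (one per line) and print the
# snapshot with the lowest number after "_snap".
# Assumption: a lower number means an older snapshot.
oldest_snapshot() {
    tr -d ' ' |                                # lvs pads names with spaces
        grep '_snap[0-9][0-9]*$' |             # keep only snapshot names
        sed 's/.*_snap\([0-9]*\)$/\1 &/' |     # prefix each name with its number
        sort -n |                              # numeric sort, lowest first
        head -n 1 |
        cut -d' ' -f2                          # print the name only
}

# Assumed usage on a real system (not run here). Note that -y skips the
# confirmation prompt, so double check the name first:
#   victim=$(lvs --noheadings -o lv_name mongovg | oldest_snapshot)
#   lvremove -y "mongovg/$victim"
```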

How to Restore an LVM Snapshot?

This is the part that is not so straightforward. First you need to stop the app and the DB, and then, if you can, unmount the filesystem. That makes things easier...

The merge (aka restore) process is quite complex. The command to do it is:

    # lvconvert --mergesnapshot <snapshot_logical_volume>

After your merge, any newer snapshots become useless. This is because the origin has been rolled back to an earlier point in time, so snapshots taken after that point describe a state you have just discarded.
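Putting the restore steps in order, a dry-run helper might look like the sketch below. It only prints the commands, so you can review them before running anything by hand. The function name `restore_plan`, the `mongod` systemd unit, and the app unit passed as the second argument are all assumptions. The volume group and mount point are the ones used in this post, and the `mount` line assumes the filesystem is listed in /etc/fstab.

```shell
# Hypothetical restore plan: print, in order, the commands for rolling the
# origin volume back to a snapshot. Nothing is executed.
# $1 = snapshot LV name, $2 = systemd unit of the application (assumed).
restore_plan() {
    snap=$1
    app_unit=$2
    echo "systemctl stop $app_unit"                 # stop the app first
    echo "systemctl stop mongod"                    # then the DB (assumed unit name)
    echo "umount /var/lib/mongodb"                  # close the origin volume
    echo "lvconvert --mergesnapshot mongovg/$snap"  # merge the snapshot back
    echo "mount /var/lib/mongodb"                   # assumes an /etc/fstab entry
    echo "systemctl start mongod"
    echo "systemctl start $app_unit"
}

# Example: restore_plan mongolv_snap285 myapp
```

Unmounting first matters because, as far as I know, LVM defers the merge while the origin is still open and only starts it the next time the volumes are activated with both closed.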

More once I get a script done...

But this should be enough for most geeks to get started. If not, let me know, and I can help. This is my daily bread and butter.

If you have any infrastructure benchmarks you would like to run, please let me know. I can help!

Troubleshooting and massively parallel processes are some of the daily jobs I need to deal with. I might be able to help you with the most complicated infrastructures on the planet, both OS-wise and with devices. Tricky questions too...

Wondering whether you should run locally or in the cloud? I can help with that too. I might be crossing some barriers here, so let's keep it generic enough that it can't interfere with my line of work.

🗳 Vote for Hive-Engine Witnesses here!!!

If you like this work, consider supporting (@atexoras.witness) as a witness. I am going to continue this work as much as I am permitted to and also because it's really great fun for a geek like me! 😋😎