Stepping up the AI game | Avina Splintershort

I decided to take another step down the rabbit hole of AI-generated content. I've been playing around with AI images for a while, and with AI videos more recently, and wanted to try the best tools out there to see what I could make.

This was my very first attempt at creating a "mini film" (or "Splintershort"). Note that I had zero experience with video editing prior to this, so please don't be too harsh when reviewing the final result... :)

Starting off, here's the end result, which took about 3-4 hours to make from start to finish. Further below, I explain the process I went through and what I'm going to be trying next.

Tools

For this project I did some research on the various tools out there (there are so many!) and settled on the "industry-leading" ones. Specifically, I used:

- ChatGPT for script support and storyboarding (https://chatgpt.com/)

- Midjourney to create consistent high-fidelity images (https://www.midjourney.com/)

- Runway to animate the scenes (https://app.runwayml.com/)

- Freesound for free sound effects (https://freesound.org/)

- Pixabay for the music track (https://pixabay.com/)

- Clipchamp to stitch the video together

Base Character & Storyboarding

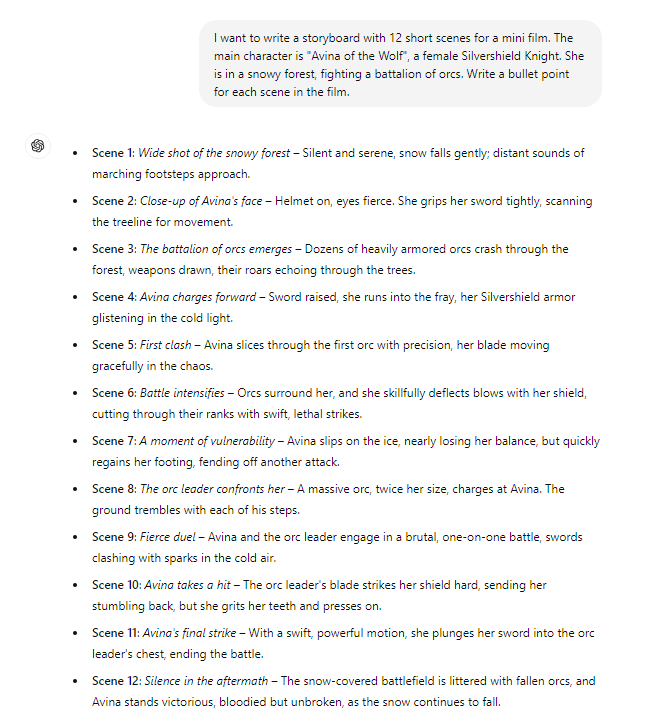

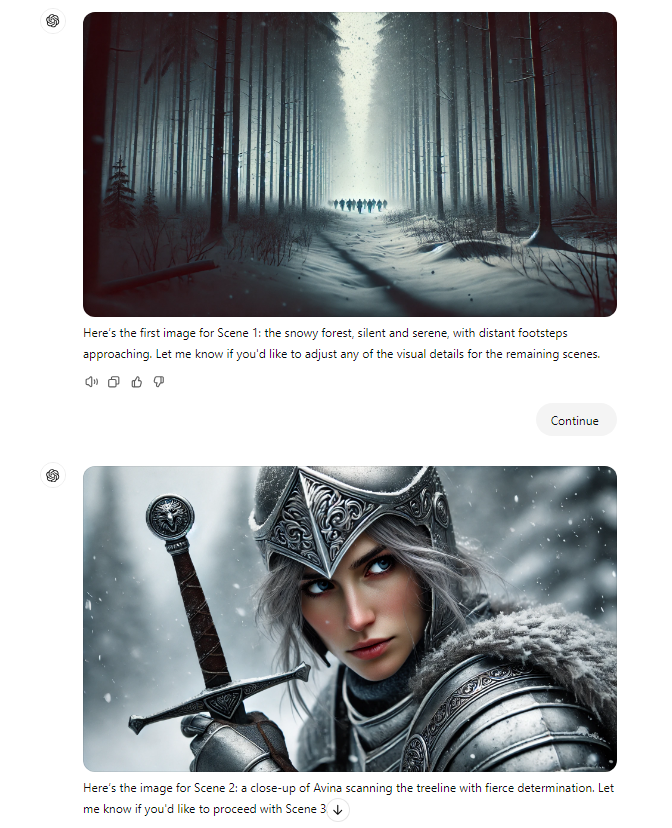

The first step was to create a story. I picked one of my favorite characters in #Splinterlands, Avina of the Wolf, and started off by getting ChatGPT's help to create a short storyboard and start visualizing the various scenes. This can be easily achieved by giving ChatGPT a premise and clear guidelines (e.g. "12 short scenes for a mini film"), and then asking it to create consistent visual representations of each scene.

After a bit of back and forth, I had the basis for the scenes I wanted to use and it was time to refine the images.

High fidelity images

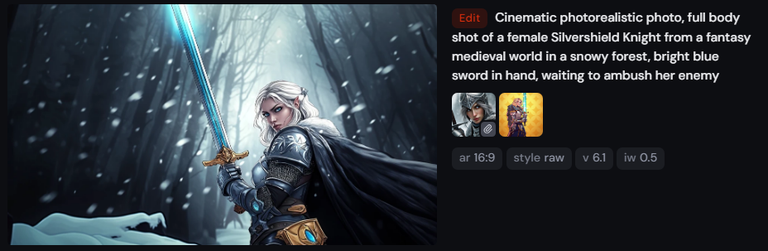

After doing some research, I concluded that Midjourney would be the best tool for the job, as it creates beautiful imagery and has tooling for both image consistency and editing.

In Midjourney, you can provide images as part of the prompt and specify whether those are character or scene references. By taking the original art from Splinterlands for Avina as the character reference, using the ChatGPT image as scene reference, and providing some more details, I was able to start refining the image of Avina to be closer to what I wanted.

After a few iterations and experiments, I landed on an image baseline and style that I liked.

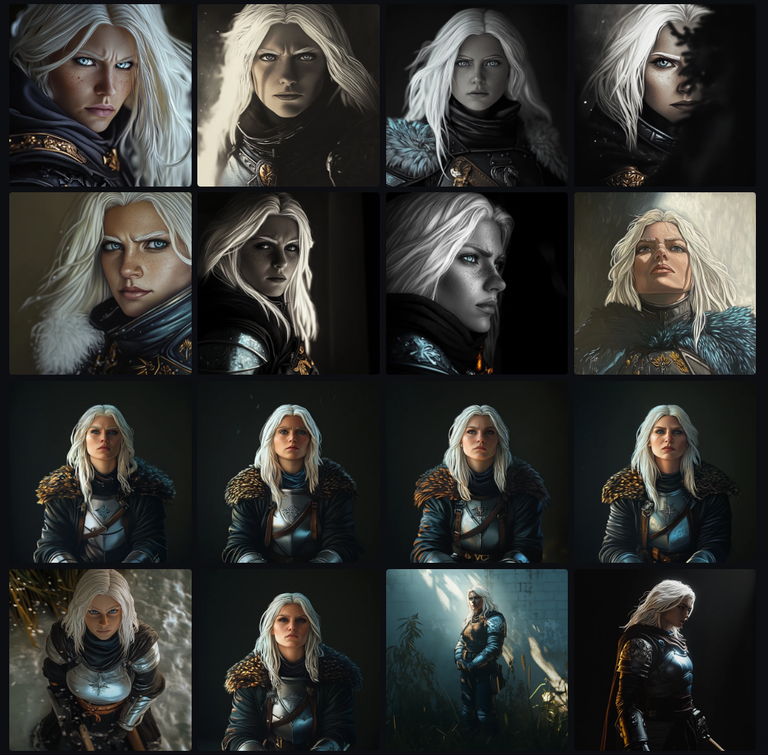

This took a lot of experimentation and I got some incredible images in the process.

In some of the prompts above you may see some parameters applied. The most interesting ones in my opinion are "--iw", which specifies the weighting of the scene image reference, and "--cw", which specifies the weighting of the character image reference. By using these correctly, you're able to maintain the atmospheric and character consistency you need when producing a storyboard. However, if you pay any attention at all you'll notice the consistency isn't perfect, and both Avina and the Orc are changing their clothing a bit throughout the scene 🤣
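To make the two weights more concrete, here is a rough sketch of how such a prompt could be put together. It is not the exact prompt I used: the helper name, the example URLs, and the scene description are placeholders, and the value ranges (0 to 3 for "--iw", 0 to 100 for "--cw") are my understanding of recent Midjourney versions, so double-check them against the version you're on. The scene image goes at the start as an image prompt, and the character image is passed with "--cref".

```python
def build_midjourney_prompt(description, scene_url, character_url, iw=1.0, cw=100):
    """Assemble a Midjourney-style prompt string.

    Illustrative helper only: it just formats text to paste into Midjourney,
    it does not call any Midjourney API.
    """
    # Assumed ranges; verify against the Midjourney version you use.
    if not 0 <= iw <= 3:
        raise ValueError("iw is assumed to be between 0 and 3")
    if not 0 <= cw <= 100:
        raise ValueError("cw is assumed to be between 0 and 100")
    # Image prompt URL first, then the text description, then the parameters.
    return f"{scene_url} {description} --cref {character_url} --cw {cw} --iw {iw}"


# Placeholder URLs and description, for illustration only.
prompt = build_midjourney_prompt(
    "Avina tracking an orc patrol through a forest, cinematic lighting",
    "https://example.com/scene.png",
    "https://example.com/avina.png",
    iw=1.5,
    cw=80,
)
print(prompt)
```

Raising "--iw" pulls the result closer to the scene image, while lowering "--cw" lets Midjourney focus on the character's face and relax details like clothing.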

With this part done, I just needed to create key images for the remaining scenes.

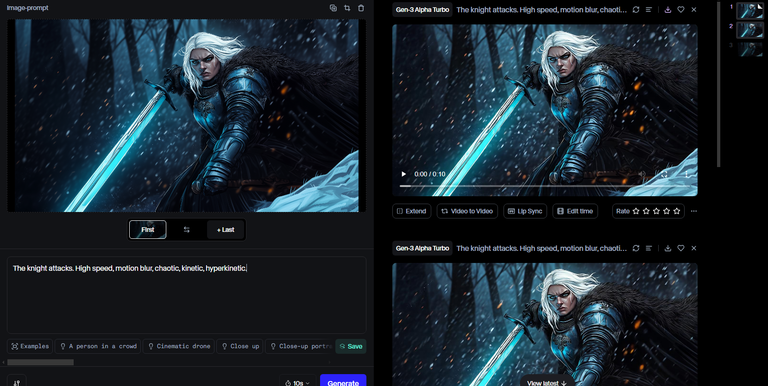

Image to Video

The next (and scariest) step was to take the storyboard into Runway to start animating the scenes. This is something I was totally unfamiliar with, and frankly I feel like I just scratched the surface and have a LOOOONG way to go before I get any good at this. It's not trivial at all, but it's certainly doable with patience and effort.

On Runway, I used the Generative Video option with their Gen-3 Alpha Turbo model and inserted the scenes one by one.

One very important lesson: when doing image-to-video in Runway, you do not need to re-describe the image itself. You should only describe the camera style (what shot you want, and how it moves) as well as the motion you expect from the characters in the shot.
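As a small sketch of that idea, the prompt can be thought of as two parts, camera and motion, with nothing about what the image already shows. The helper and the example wording below are hypothetical and only format text to paste into Runway; they don't call any Runway API.

```python
def build_motion_prompt(camera, subject_motion):
    """Combine a camera direction and a subject-motion direction into one prompt.

    Illustrative helper only: the image itself is deliberately not described.
    """
    parts = [camera.strip().rstrip("."), subject_motion.strip().rstrip(".")]
    parts = [p for p in parts if p]
    if not parts:
        raise ValueError("provide a camera direction, a motion direction, or both")
    return ". ".join(parts) + "."


# Example wording is a placeholder, not the prompt used for the film.
runway_prompt = build_motion_prompt(
    "Slow dolly-in, low angle",
    "The orcs walk toward the camera at a steady pace",
)
print(runway_prompt)
```

Keeping the two parts separate also makes it easier to change one thing at a time between exports.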

It took me ~100 exports to get the videos I wanted. Sometimes Runway would hallucinate and do really weird stuff with the videos (like it kept adding a random wolf into one). Other times the result just wouldn't look right (getting those orcs to walk properly took 10 tries, and the final one still wasn't great). And sometimes it just didn't quite do what I wanted, so I needed to fine-tune my instructions and keep experimenting.

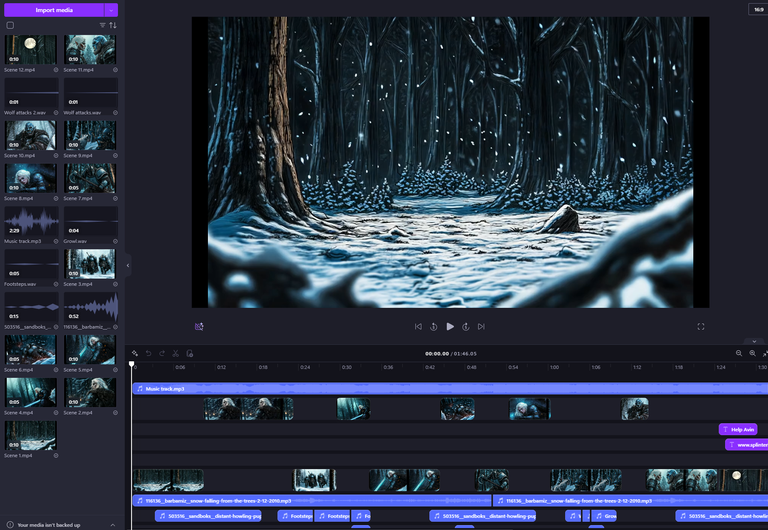

Stitching things together

Finally, once I had reasonable videos for each scene in my mini-film, it was time to stitch it together. For this part I picked a very simple tool rather than a state-of-the-art one, partly because I was too tired to figure out DaVinci Resolve and partly because I didn't want to spend a ton of money on Adobe Premiere 😅. Clipchamp did the job, as it provides a very simple, no-frills interface for putting together video, image, sound, music, and text assets.

As I said before, the entire process took me somewhere around 3-4 hours. It could have taken much longer, but I had some prior experience with several of the tools here and also had a gameplan that I stuck to. I didn't strive for perfection but rather for completion, as I wanted to get through all the motions once so that I could understand the entire workflow before starting my next project.

I hope you found this helpful and/or entertaining, and I look forward to offering you more (and better) videos in the future.

AI is insanely creative. This is really cool from just a few hours of work. You have skills. Any more ideas on the horizon?

Yes, plenty more coming...stay tuned :)

Dude this is crazy cool!

I am shocked at how fast we went from AI Text... to AI Image... to AI Sound... to AI Video...

It's pretty crazy how quickly things have developed...

Yeah it's absolutely insane. But what I love most about it is how much it EMPOWERS us. We're still in control (for now!).

For now 😁😅

Damn, that's pretty fantastic.

I really have to dedicate more time to getting into this stuff!

Thank you!

Tofu, you are killing the AI game! Love seeing the full documented process & of course the final product is just badass. Well done hombre!

Thank you TPG! Love that you enjoyed it, and hope you'll get a kick out of the next ones.

Simply beautiful !BBH

Thank you so much! Really appreciate it!

this is absolutely amazing Brave!

Thanks AZ! I've got more coming!

Thanks for sharing! - @azircon

So creative, I love it.

Excellent! very good work.

!PIZZA

It’s impressive how you took the leap into AI-generated content with your Splintershort! The combination of tools like ChatGPT, Midjourney, Runway, and Clipchamp to create a mini-film is inspiring, especially considering your lack of prior video editing experience. The blend of Splinterlands characters with AI-generated storyboarding and animation adds a fresh layer to the creative process.

Your process of iterating images in Midjourney to get the desired visual consistency shows real dedication. The way you used parameters like "--iw" and "--cw" to maintain consistency between atmospheric and character references, even if not perfect, is a great insight into refining AI-generated art.

The challenge of animating the scenes using Runway also seemed quite intense, especially with the hurdles of getting specific motions right, but your perseverance in fine-tuning and exporting the videos a hundred times shows real commitment.

Finally, stitching everything together in Clipchamp after experimenting with various video editing platforms was a smart choice, showing that even simpler tools can get the job done efficiently.

This experiment demonstrates the incredible potential of combining AI tools in creative projects. It's exciting to see how this will evolve as you refine your skills and explore more complex projects. Looking forward to seeing what comes next!
