Anti-abuse vs Censorship: An exploration of Hive Politics

One of the most persistent tensions on this social blockchain of ours is the one between those pushing “anti-abuse” efforts and proponents of “censorship-resistance.”

Hive is a self-governing entity, so the politics surrounding this tension are technically every stakeholder’s responsibility, and are ultimately “decided” by the Witnesses we elect.

The tension can be summarized as follows:

- Blockchain tech and Hive culture are founded on principles of censorship-resistance

- But left unchecked, this privilege can (and certainly will) be abused

- How do we define abuse, how do we curtail it, and how much anti-abuse is too much?

Point 3 is the crux of the political puzzle. I don’t have answers, but this article will attempt to unpack some concepts and things to keep in mind when thinking about the problem. Let’s dive in.

A working definition of ‘Anti-social risk’

Point #2 above states:

left unchecked, this privilege can (and certainly will) be abused

I think most of us would agree. Spend any time in a financially incentivized system here in the Web3 world and you know—you just know—that eventually some opportunist will come along looking for an exploit, a loophole, a hack, etc.

But this happens in broader societies too. Scammers, liars, bullshitters, snake oil salespeople… There are also the angry, the outcast, the clinically anti-social. Negative energy that causes emotional or literal harm to a society. Much of it is systemic and not the work of particularly evil antagonists (though those people do get a disproportionate amount of attention).

Hive is absolutely not immune. In fact, due to the promise of “censorship-resistance” we will likely attract more than our fair share of folks that “normal” society has already shunned due to anti-social behaviour.

For the purposes of this article, let’s label all this “negative energy” as “anti-social risk.” That is: behaviour or people that pose a risk to a healthy society.

The political puzzle becomes: to what degree do we accept this systemic anti-social risk?

Rather than try to arrive at some perfect answer, I think it’s helpful to remove bad answers first.

I will argue that we cannot operate on the extremes, and can eliminate two bad answers:

- Zero-tolerance for anti-social risk AKA maximize anti-abuse AKA “nanny state.”

- Full-tolerance for anti-social risk AKA zero anti-abuse AKA pure anarchy.

Zero-tolerance flies in the face of censorship-resistance. It just can’t and won’t happen on a decentralized tech platform like Hive. Not even Facebook is pure zero-tolerance… so there’s no point in even thinking about this outcome.

Less obvious may be the untenable extreme of Full-tolerance.

The Paradox of Tolerance

Full tolerance for anti-social risk (anarchy). Let the exploits run wild, let the scammers scam, allow hate-speech, police nothing. Put all the weight of risk management onto individuals and none of it on “society.”

This tends to convert society into a fiefdom ruled by whoever swings the biggest stick. Temporary emperors, constant strife, lots of trampled citizens. Good times for those who have access to power (until they don’t). Bad times for everyone else. If Hive is to grow and be prosperous, it needs to have a positive impact on a lot of users. The anarchist reality is a terrible sales pitch, since it is a good experience only for a few whales. The vast majority of folks would just leave for anything marginally better.

There’s another term for this outcome—The Paradox of Tolerance.

From Wikipedia, emphasis mine:

The paradox of tolerance states that if a society is tolerant without limit, its ability to be tolerant is eventually seized or destroyed by the intolerant. Karl Popper described it as the seemingly self-contradictory idea that in order to maintain a tolerant society, the society must retain the right to be intolerant of intolerance.

Balancing tolerance & minimizing discontent

Intolerance of intolerance: this is a catchy idea that I think is incredibly important.

It means that even in a censorship-resistant society, there needs to be some degree of anti-abuse. We can’t turn it up to maximum, but we also can’t let it slip to zero. Ideally, we want to find a balance that minimizes the number of users who think “I don’t like this!”

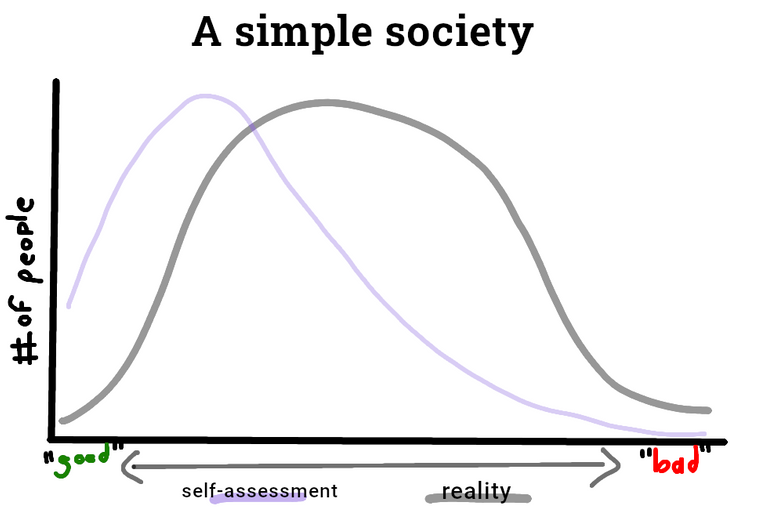

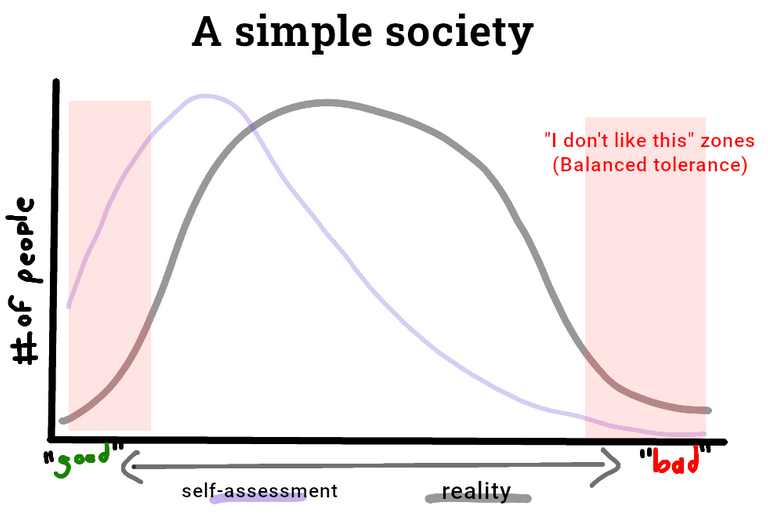

Visually, here’s a simplified society chart, filled with lots of people who are on a spectrum of “good” and “bad,” where:

- “Good” means they are pro-social, selfless, want to see others succeed, want to see Hive succeed, are generous, generally positive, blah blah blah.

- “Bad” means they are anti-social, selfish, only care about their own outcomes, don’t care about Hive long term, are rude, generally negative, blah blah blah.

- For fun, I’ve included the idea that most people will likely overestimate their own “goodness” relative to the actual average, a well-known phenomenon in psychology (often called the better-than-average effect).

Above, we see that most people are a mix of “good” and “bad.” On the extremes, we have a few really wonderful “saints” and some truly evil “demons.”

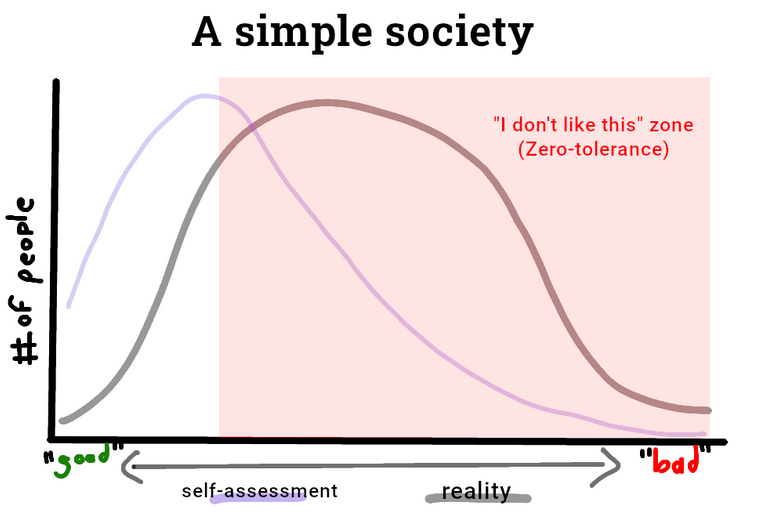

In the untenable zero-tolerance scenario, a “nanny state” with tons of rules about what you can and can’t do, most people are unhappy. Sure, you have totally wiped out all the demons… but you have also made the majority of normal folks unhappy. This effect is made worse because in Web3 and on Hive, we expect a large degree of permissionlessness, censorship-resistance, etc.

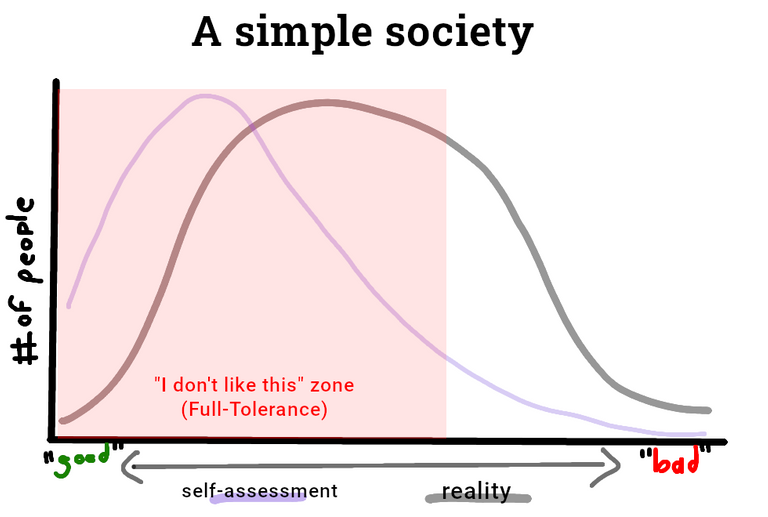

Full tolerance has the opposite effect, which I’d argue is worse. All the very good and great people are unhappy, the majority is unhappy, and the chaos caused by letting the demons run free even upsets those who lean a little “bad.”

Political decisions and policy that find a way to appeal to the majority tend to be most efficient. Yup, there will be some upset “saints” who can’t handle anything but a strict, rules-heavy world. But we also nail the majority of the “demons” and their destructive anti-social behaviour.
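To make the charts’ argument a little more concrete, here is a toy model in Python. It is a sketch under loudly stated assumptions, not a measurement of Hive: every user gets a hypothetical “goodness” score clustered around zero (most people are a mix), each user’s preferred anti-abuse level rises with their goodness (saints want a strict dial, demons want none), and a user thinks “I don’t like this!” when the dial sits more than a fixed tolerance away from their preference. The function names, the 0.35 spread, and the 0.2 tolerance are all made up for illustration.

```python
import random


def preferred_dial(goodness: float) -> float:
    """Map a goodness score in [-1, 1] to a preferred anti-abuse level in [0, 1].

    Hypothetical assumption: "saints" (+1) want maximum strictness,
    "demons" (-1) want none, everyone else sits somewhere in between.
    """
    return (goodness + 1) / 2


def make_population(n: int = 1000, seed: int = 42) -> list[float]:
    """Goodness scores clustered around 0, clipped to [-1, 1]:
    most people are a mix of "good" and "bad", with few saints and demons."""
    rng = random.Random(seed)
    return [max(-1.0, min(1.0, rng.gauss(0.0, 0.35))) for _ in range(n)]


def discontent(dial: float, population: list[float], tolerance: float = 0.2) -> float:
    """Fraction of users thinking "I don't like this!" at a given dial setting:
    anyone whose preferred level is more than `tolerance` away from the dial."""
    unhappy = sum(1 for g in population if abs(preferred_dial(g) - dial) > tolerance)
    return unhappy / len(population)


def best_dial(population: list[float], steps: int = 20) -> float:
    """Grid-search the dial (0 = pure anarchy, 1 = nanny state) that minimizes discontent."""
    candidates = [i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda d: discontent(d, population))


if __name__ == "__main__":
    pop = make_population()
    for d in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"dial={d:.2f}  discontent={discontent(d, pop):.0%}")
    print("best dial:", best_dial(pop))
```

Under these assumptions the discontent curve is high at both ends and lowest somewhere in the middle, which is the “remove the bad answers first” argument in miniature. Because the preferences here are symmetric, the model does not capture the claim that full tolerance is worse than zero-tolerance; that would need an extra term for the chaos the demons inflict on everyone else.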

Censorship-resistant doesn’t mean Consequence-free

While there isn’t an easy solution, this argument does strongly favour the existence of some rules and tools to counter abuse. Our anti-abuse dial is set somewhere above zero, just not cranked up too high.

The big questions are:

- What constitutes “abuse”?

- How is it reliably measured?

- What consequences are appropriate and how do we keep them proportionate?

- Can or should some of this be built into the code?

- How do we amend or adjust these things over time?

- How does Hive come to an agreement on the above?

🤕 What constitutes abuse?

We need not define this from scratch. Web3 shares much of its DNA with the Internet landscape from which it came. There’s a rich history of fraud, exploits, and other malicious behaviour that could serve as a template for Hive.

Example: Start with this extensive list and rate each item by how much it applies to Hive.

📐 How do you reliably measure abuse?

The open and transparent nature of the chain should actually make this problem easier than on Web2. Granted, many forms of abuse and scams take place in communities peripheral to Hive (e.g. Discord)... but even so there should be ways to programmatically track, analyze and ID patterns of abuse with the right tech.

Easier said than done, of course. Not only do you need to build the thing, but it needs precise definitions of “abuse” in order to produce a meaningful output for analysis.
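As one example of the kind of precise definition this requires, here is a minimal Python sketch of a single, narrow metric: the share of an account’s upvote weight that goes to its own posts. The `Vote` record is a hypothetical, simplified shape (not a real Hive API call or library type), and the 50% threshold and three-vote minimum are arbitrary placeholders. A real tool would need to pull vote history from the chain and would need far more context (alt accounts, vote trading) before calling anything abuse.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    """Hypothetical, simplified vote record (not a real Hive API type)."""
    voter: str
    author: str
    weight: int  # assumed scale: 10000 = 100% upvote, negative = downvote


def self_vote_ratios(votes: list[Vote]) -> dict[str, float]:
    """Fraction of each voter's total upvote weight that went to their own posts."""
    total: dict[str, int] = defaultdict(int)
    self_weight: dict[str, int] = defaultdict(int)
    for v in votes:
        if v.weight <= 0:
            continue  # only upvotes count toward this metric
        total[v.voter] += v.weight
        if v.voter == v.author:
            self_weight[v.voter] += v.weight
    return {voter: self_weight[voter] / total[voter] for voter in total}


def flag_self_voters(votes: list[Vote], threshold: float = 0.5, min_votes: int = 3) -> list[str]:
    """Accounts whose self-vote ratio exceeds `threshold`, skipping accounts
    with fewer than `min_votes` upvotes (too little data to judge)."""
    upvote_counts: dict[str, int] = defaultdict(int)
    for v in votes:
        if v.weight > 0:
            upvote_counts[v.voter] += 1
    ratios = self_vote_ratios(votes)
    return sorted(
        voter for voter, ratio in ratios.items()
        if ratio > threshold and upvote_counts[voter] >= min_votes
    )


# Made-up sample data for illustration only.
SAMPLE_VOTES = [
    Vote("alice", "alice", 10000),
    Vote("alice", "alice", 10000),
    Vote("alice", "bob", 5000),
    Vote("bob", "carol", 10000),
    Vote("bob", "bob", 2000),
    Vote("bob", "dave", 10000),
    Vote("carol", "carol", 10000),
    Vote("dave", "alice", -10000),
]

if __name__ == "__main__":
    print(self_vote_ratios(SAMPLE_VOTES))
    print(flag_self_voters(SAMPLE_VOTES))  # ['alice']
```

With the sample data, only alice is flagged: 80% of her upvote weight went to herself. carol also self-voted 100%, but a single vote is too little data, which is exactly the kind of judgment call a definition of “abuse” has to make explicit.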

⚖ What consequences are appropriate and proportional?

I think proportionality is easier to solve, assuming the definitions and measurements are solid. You can also test it over time and increase or lessen harshness based on results.
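Here is a minimal Python sketch of what “proportional, and adjustable over time” could look like. The assumptions are entirely hypothetical: abuse has already been measured as a severity between 0 and 1, each prior offense adds 50% to the base consequence, and a single community-tuned `harshness` multiplier is nudged down when too many people are wrongly punished and up when too many offenders repeat. None of these names or numbers come from Hive; they only show the shape of the idea.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Policy:
    """Hypothetical community-tuned settings (not part of Hive)."""
    harshness: float = 1.0      # global multiplier on every consequence
    min_harshness: float = 0.25
    max_harshness: float = 2.0
    step: float = 0.25          # how far one review can move harshness


def consequence(severity: float, prior_offenses: int, policy: Policy) -> float:
    """Consequence strength in [0, 1] for one incident.

    severity: measured seriousness of the abuse, 0 (trivial) to 1 (severe).
    Each prior offense adds 50% to the base consequence; the result is capped at 1.
    """
    raw = severity * policy.harshness * (1 + 0.5 * prior_offenses)
    return min(1.0, raw)


def retune(policy: Policy, repeat_rate: float, false_positive_rate: float) -> Policy:
    """Adjust harshness after reviewing results.

    Too many wrongly punished users (> 10%) -> soften.
    Otherwise, too many repeat offenders (> 20%) -> harden.
    Harshness is clamped to [min_harshness, max_harshness].
    """
    h = policy.harshness
    if false_positive_rate > 0.10:
        h -= policy.step
    elif repeat_rate > 0.20:
        h += policy.step
    h = max(policy.min_harshness, min(policy.max_harshness, h))
    return replace(policy, harshness=h)


if __name__ == "__main__":
    p = Policy()
    print(consequence(0.4, 0, p), consequence(0.4, 2, p))
    p = retune(p, repeat_rate=0.3, false_positive_rate=0.05)
    print(p.harshness)  # 1.25
```

Softening takes priority over hardening in this sketch, on the assumption that wrongly punishing people does more damage to a censorship-resistant community than letting a few repeat offenders slip through. A different community might reasonably weigh it the other way.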

But what exactly are the consequences?

Today we have downvotes at the core, and a muting system on some frontends. These are both punitive measures, aren’t actually “censorship,” and can work in some cases (but can also spiral out of control in others).

There are also softer “social” consequences—known abusers won’t get invited to work on projects, they’ll be harried off of Discord servers, many curators will avoid them.

Given the limitless possibilities of the tech and code, more sophisticated punitive, social, or even negative- or positive-reinforcement techniques surely can be conceived and tested. Here’s a sample of such a conversation, exploring the idea of stake-negation or “anti-delegation.”

👩‍💻 To what degree should anti-abuse be code-based?

Currently, anti-abuse measures are almost wholly managed by people, with maybe some help from scripts. I’m sure some of the more serious vigilantes have built custom tools to enhance their pursuits—but I’m talking about the Hive base code here.

Could there (should there?) be more anti-abuse tools beyond the simple downvote button (or, say, withholding or removing your witness vote)? Built-in reporting, self-upvote monitoring, mod tools… This path can be a slippery slope toward twisting the anti-abuse dial too far, though. Many people remember “the good ole days” of Reddit, before it got bit by the centralized corpo bug and started dealing in heavy-handed policies and empowering mods who then went on to abuse their “anti-abuse” tools. Not a fun path.

⚙ How do we amend or adjust these things over time?

Policy must change over time, because, well, times change. In order to be a competitive force in the larger world, Hive absolutely must stay agile and unmired in any sense of a “the way we’ve always done it” mindset.

How often should the above ideas be revisited? Is the frequency itself based on a dynamic metric? Do we commit to a schedule?

🤝 How does Hive come to an agreement on the above?

Part of the challenge is the double-edged nature of Hive’s decentralization. If we had a centralized “executive team,” they could lock themselves in a room for a week and emerge with a plan (and hopefully execute it).

But we don’t, and because of that we have many, many advantages.

But we have some disadvantages too, like coordinating around, answering, and acting on any of the questions above.

Ultimately, the Hive Witnesses determine what gets baked into the code of Hive.

How much political pressure and responsibility should Witnesses bear? Surely more the closer to the Top they are, due to having literal control of how the chain operates, right? But how do we hold them to account on that?

As in any political system (including the one in your own country), apathy toward your own civic duty will lead to the answer being: “we can’t hold them to account.” The only real way to do it in this delegated proof-of-stake / decentralized system is to:

- Notice which Witnesses are committed to listening to the “society”

- Use your Witness votes judiciously: research, and expect two-way communication

- Use your personal influence to get others to do the same

- Have conversations about these political questions!

Even without swaying the Witnesses to enact code-level changes to anti-abuse systems, we do have another, totally viable path. Indie developers and community leaders still must adhere to the baseline systems of Hive. But apart from that, they often have more centralized control over their systems.

Frontends like Leo Finance, Peakd and Ecency command huge amounts of social attention and energy. If good, balanced anti-abuse systems are built into the tools we use to interact with the chain, then (per the charts above), folks will gravitate towards using them over places that get the balance wrong.

To me, this is the most likely way forward compared to getting Hive core to change too much. Hive core provides the decentralized foundation, and fully- or quasi-centralized projects build the digital society-experiences on top of that.

The Main Takeaway

This post was long, but it’s a meaty subject! To boil it all down into a key takeaway is tough, but I would say it’s this:

Any functioning society that wishes to grow (e.g. Hive) must enact a good balance of anti-abuse policies. You cannot have zero, because then you devolve into an ugly fiefdom. You cannot push anti-abuse to the max, because that simply doesn’t work in Web3.

Thanks for stopping by and reading. The hero image at the top was made with a Canva Pro License and uses the Leo Finance logo.

Posted Using LeoFinance Alpha

I think Hive is set up well in the sense that cheats who get caught essentially stealing from the reward pool at genuine people's expense are policed. Whether the people doing the policing are good or bad is a hot-button issue, but I think they're necessary; otherwise we would be filled with junk content and, as a whole, it wouldn't be a good place to be.

The social side of this blockchain is then regulated by the people. We shouldn't have someone enforcing rules about what someone can say or post about. It's good that people on an individual level can have a run-in with someone they don't like and then just downvote each other.

In fairness, the downvotes are what keep people in line. I haven't run across many trolls here, unlike just about everywhere else online, mainly because of the reputation system and most people not wanting to attract negative attention and ruin their chances of building their account and assets.

Is that team not made up of 20...

You speak of the Top Witnesses? They are far from a team, haha.

Lots of interesting thoughts!

Erm... By chance... have you ever heard the story of the 5 Monkeys Experiment?